Azure Functions - first encounter

During the summer I worked on a project with students for a pro bono customer. The project uses Azure Functions as the back end for a mobile application built with React Native. This was my first encounter with Azure Functions, and with serverless as a whole, and it has been an entirely positive experience. Especially on a time-limited project, with students who had little experience building production-ready applications, Azure Functions supercharged the process! By not having to spend time on dotnet boilerplate and best practices, we were able to spend time on what actually matters: writing code that solves the business problems.

In this post I will briefly present Azure Functions as well as my initial experiences and aha-moments.

A main goal of Azure Functions, and of serverless in general, is to make configuration and management easier, so that developers can focus on writing business logic and solving problems faster. Serverless providers make everything that usually requires knowledge and boilerplate "just work". Handling HTTP requests, integrating with other cloud services (storage and transport), scaling and security are all handled with barely any configuration.

Triggers and bindings

A function consists of a trigger and, optionally, one or more input and output bindings. The framework provides a whole suite of bindings that make integration with other Azure offerings a piece of cake. The function below is triggered by an HTTP request and has a table storage output binding. The only configuration required for this to work is an Azure Function app with a valid Azure storage account connection string. The most used triggers and bindings are the standard storage and messaging ones, including Cosmos DB, Azure Storage and Azure Service Bus, but there are also more niche ones such as OneDrive triggers and Twilio bindings.

A simple function that handles an HTTP request and writes a new entity to Azure table storage is implemented as follows:

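    using System.Net;
    using System.Net.Http;
    using Microsoft.Azure.WebJobs;
    using Microsoft.Azure.WebJobs.Extensions.Http;
    using Microsoft.Azure.WebJobs.Host;
    using Newtonsoft.Json;

    [FunctionName("RegisterWine")]
    public static HttpResponseMessage Run(
        [HttpTrigger(AuthorizationLevel.Anonymous, "post", Route = null)]
        HttpRequestMessage req,
        [Table("WineInventory")] out InventoryWine newWine,
        TraceWriter log)
    {
        // An async method cannot have out parameters, so the body is read synchronously.
        dynamic data = req.Content.ReadAsAsync<object>().Result;

        // Assigning the out parameter is what writes the entity to table storage.
        newWine = new InventoryWine(data.vinmonopoletId);

        var responseContent = new StringContent(JsonConvert.SerializeObject(newWine));
        return new HttpResponseMessage(HttpStatusCode.OK)
        {
            Content = responseContent
        };
    }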

Another useful trigger is the TimerTrigger, which can be used to create functions that run on a schedule. A timer-triggered function is created in the same way as the previous one, with a timer as input instead of the HTTP request. The schedule is configured with a cron expression: a string with six fields in the format {second} {minute} {hour} {day} {month} {day-of-week}. In this example the trigger is configured to run at 09:30 every day of the year.

    [FunctionName("UpdateVinmonopoletRepo")]
    public static void Run(
        [TimerTrigger("0 30 9 * * *")] TimerInfo myTimer,
        TraceWriter log)
    {
        log.Info("Function trigger on a timed schedule");
    }
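To make the six-field format more concrete, here are a few more schedule strings written in the same format. They are illustrations only and are not taken from the project; by default the runtime evaluates timer schedules in UTC, so adjust the hour if local time matters.

```text
0 */5 * * * *     every five minutes
0 0 * * * *       at the top of every hour
0 30 9 * * 1-5    at 09:30, Monday through Friday
0 0 0 1 * *       at midnight on the first day of every month
```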

Function interaction

Building applications with Azure Functions introduces a quite different programming model, with a focus on business logic instead of complicated abstractions and patterns. Interaction with storage or other services is done through simple abstractions (even using out variables (!)). It is often desirable to have multiple functions cooperating, e.g. when doing multiple cloud operations triggered by the same request or event. Since any operation might fail, it is advisable to do each operation in its own function and to keep each function's logic idempotent. This is important because failures and outages will happen, and they might interrupt a function mid-execution, possibly after it has executed only one of several storage operations.

This is usually solved by using storage queues for communication between functions. Multiple functions can live in the same static class, which makes the functions and the interaction between them easy to read and follow. The following example consists of one HTTP-triggered function that writes a queue message, and a queue-triggered function that inserts an entity into table storage.

    [FunctionName("StoreObject")]
    public static async Task<HttpResponseMessage> StoreObjectRequestHandler(
        [HttpTrigger(AuthorizationLevel.Anonymous, "post")] HttpRequestMessage req,
        [Queue("SaveObject")] IAsyncCollector<SaveObjectMessage> queue,
        TraceWriter log)
    {
        // Hand the work over to the queue instead of touching storage directly.
        dynamic data = await req.Content.ReadAsAsync<object>();
        await queue.AddAsync(new SaveObjectMessage() { Id = data.Id });
        return new HttpResponseMessage(HttpStatusCode.OK);
    }

    [FunctionName("SaveObject")]
    public static async Task SaveObjectMessageHandler(
        [QueueTrigger("SaveObject")] SaveObjectMessage message,
        [Table("MyObjects")] CloudTable myObjectsTable,
        TraceWriter log)
    {
        // Runs once per queue message and inserts the entity into table storage.
        await myObjectsTable.ExecuteAsync(
            TableOperation.Insert(new MyObjectEntity(message.Id.ToString())));
    }
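The message and entity types used above are not shown, and a plain Insert fails with a conflict if the same queue message is processed a second time after a retry. The following is a minimal sketch of how the supporting types and an idempotent save could look. The shape of SaveObjectMessage and MyObjectEntity, the string type of Id, the fixed partition key and the IdempotentSave helper are all assumptions made for illustration, not the project's actual code.

```csharp
using System.Threading.Tasks;
using Microsoft.WindowsAzure.Storage.Table;

// Hypothetical queue message; the runtime serializes it to JSON on the queue.
public class SaveObjectMessage
{
    public string Id { get; set; } // assumed to be a string here
}

// Hypothetical table entity; the fixed partition key is an assumption.
public class MyObjectEntity : TableEntity
{
    // The storage SDK needs a parameterless constructor when reading entities back.
    public MyObjectEntity() { }

    public MyObjectEntity(string id) : base("MyObjects", id) { }
}

public static class IdempotentSave
{
    // InsertOrReplace yields the same stored row no matter how many times
    // the same message is handled, so a retried message does not fail.
    public static Task SaveAsync(CloudTable table, SaveObjectMessage message) =>
        table.ExecuteAsync(
            TableOperation.InsertOrReplace(new MyObjectEntity(message.Id)));
}
```

Whether replacing an existing row is acceptable depends on the data. If an existing entity must never be overwritten, keeping Insert and treating a conflict as "already done" is another option.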

Development

Getting started with a new Azure Functions app is very simple: all the tools needed are included in the Azure Functions Core Tools. These tools can be installed through common package managers, including NuGet, npm and Homebrew. The included CLI provides commands to create and develop Azure Functions in a breeze.

To get started, run func init, which creates a new project and two configuration files: host.json and local.settings.json. To be able to use bindings (table storage, queues etc.), a storage account connection string has to be configured in local.settings.json. This is only relevant for local development, since the setting is configured in the function app itself after deployment. Either use the Azure storage emulator connection string (only on Windows) or create a storage account to be used only for local development (remember not to check in the connection string). The file should look as follows; note that the runtime configuration can be dotnet, java or node.

    {
      "IsEncrypted": false,
      "Values": {
        "AzureWebJobsStorage": "UseDevelopmentStorage=true",
        "AzureWebJobsDashboard": "",
        "FUNCTIONS_WORKER_RUNTIME": "dotnet"
      }
    }

To run the Functions runtime on Unix-like operating systems, the project has to be configured to use runtime version 2. This is done by adding the following lines to the project file.

    <TargetFramework>netstandard2.0</TargetFramework>
    <AzureFunctionsVersion>v2</AzureFunctionsVersion>

Now func start should run successfully. There might be a warning if no connection string is configured, either in the config file or in the AzureWebJobsStorage environment variable. For now there are no functions to run. Create one with the func new command and choose HTTP trigger; this creates a new function that can be triggered over HTTP. Then start the Functions runtime host with func start --build.

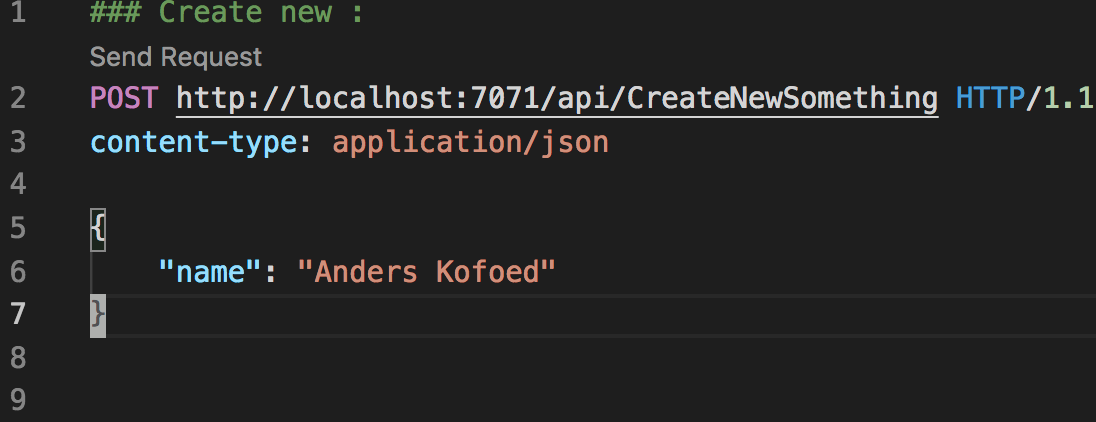

To test HTTP functions I prefer the REST Client extension for VS Code. This simple little extension uses plain text files with the .http extension, in which example requests can be defined and run.
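As an illustration, a request file for the RegisterWine function from earlier could look roughly like this. It assumes the local host is listening on its default port 7071 with the default api route prefix; the file name and the sample id are made up.

```http
### requests.http (hypothetical file name)
### Register a wine against the locally running function host
POST http://localhost:7071/api/RegisterWine
Content-Type: application/json

{
    "vinmonopoletId": "1234501"
}
```

With the extension installed, a "Send Request" link appears above the request line, and the response opens in a separate pane.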

Final words

Serverless and Azure Functions are a great environment for building business applications, allowing rapid development and agility without all the boilerplate usually required when working with e.g. .NET Core apps. They differ in how code is structured and in how connections to storage, transport and third-party services are managed, with the focus on delivering running code as soon as possible.

My experience is that serverless allows business applications to be developed much faster than with other architectural models. Standard cloud applications tend to be very opinionated about how code is structured and how infrastructure is managed. Most of these "problems" are solved by the serverless runtime itself, so time can be spent on solving problems instead of discussing style.